In AI, there have been a few “breakthrough” moments, even after I thought there wouldn’t be any more.

After OpenAI released GPT-4, there was a period of around six months where I felt that what we could get from AI had kind of stagnated.

- I felt the quality of images created via Midjourney was kind of bad

- I felt that AI was semi-useful for some kinds of very concrete coding questions (for example, to give you a script that converts Celsius to Fahrenheit), but otherwise not that useful.

- I would sometimes use AI as a writing aid, to check an article for grammar, or as a quick translator between English and Spanish.

All in all, I felt AI was a helpful tool, but not a revolution.

Then, in August 2024, Anthropic released a feature called Claude Artifacts, which changed a lot for me.

With Artifacts, instead of asking a question and getting a text-based answer back, you get an actual rendered piece of UI that you can see and play with.

In the screenshot below you can see the feature: the prompt is on the left side and a rendered piece of UI is on the right side. You can also switch to the code.

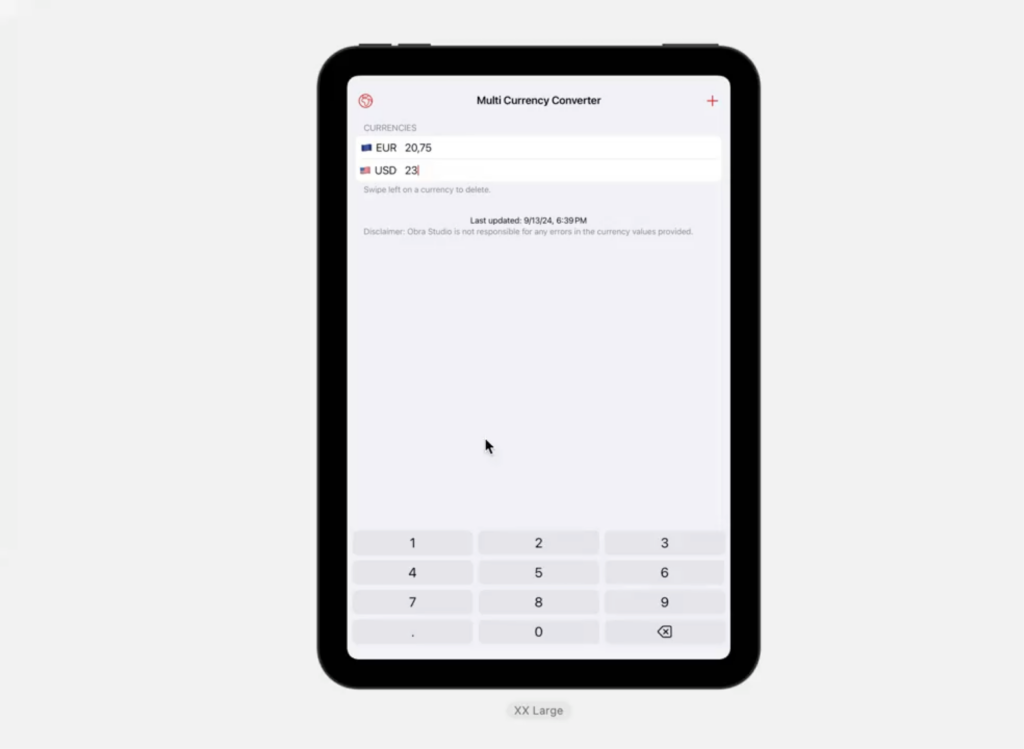

I started experimenting with Claude, Claude Artifacts and Cursor, and created a first app that was roughly 90% prompted.

It was called Multi currency Converter, a web app written in Svelte that converts an amount into more than 140 other currencies.

I wanted to find out how far I could go with Claude and Artifacts.

Turns out, I could go quite far.

The AI would help me with “boring” tasks. After building the core functionality, where I connected to an external currency API for accurate rates, I easily localized the app into more than 8 languages and added dark mode.
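The post doesn’t say how the app computes its conversions, so the following is only a minimal TypeScript sketch under two assumptions: that the currency API returns every rate relative to a single base currency (the `RateTable` shape below is hypothetical), and that localized output relies on the standard `Intl.NumberFormat` API. Converting through the base currency means one rate table covers every currency pair.

```typescript
// Hypothetical rates shape (assumption): every rate is expressed relative to one base currency.
interface RateTable {
  base: string;
  rates: Record<string, number>; // units of each currency per 1 unit of the base
}

// Convert between any two currencies by going through the base currency.
function convert(amount: number, from: string, to: string, table: RateTable): number {
  const rateOf = (code: string): number => {
    if (code === table.base) return 1;
    const rate: number | undefined = table.rates[code];
    if (rate === undefined) throw new Error(`Unknown currency: ${code}`);
    return rate;
  };
  // amount in `from` -> amount in base -> amount in `to`
  return (amount / rateOf(from)) * rateOf(to);
}

// Format an amount for a given locale; Intl handles separators and currency symbols.
function formatAmount(amount: number, currency: string, locale: string): string {
  return new Intl.NumberFormat(locale, { style: "currency", currency }).format(amount);
}

// Example with made-up rates (not real market data).
const table: RateTable = { base: "EUR", rates: { USD: 1.1, GBP: 0.85, JPY: 160 } };
const usd = convert(100, "GBP", "USD", table);
console.log(formatAmount(usd, "USD", "en-US"));
console.log(formatAmount(usd, "USD", "nl-BE"));
```

Passing a different locale to `formatAmount` is one way a localized version could display amounts correctly without custom formatting code.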

At one point, I wondered if I could create a native app with Claude as well, and lo and behold, in less than a day I had a working version of my multi currency converter app for iOS and iPadOS, with all the features of the web app plus offline support.

I learned to separate my conversations with Claude by topic. In a way, it’s nice to work in one long conversation, because Claude has the context of all your previous questions, but after a while it breaks down, and it’s often better to split the work across multiple conversations.

All in all, I was very enthusiastic about Claude and Artifacts, but there was another tool in the mix.

Around the same time, news about a new code editor called Cursor started popping up. I saw it everywhere on Twitter and got super curious.

Cursor is a fork of VS Code with an integrated panel where you can ask the AI questions about your code, in the context of a project. Its main feature is called “Composer”: you ask questions about your codebase, and Cursor then helps implement the changes directly.

So instead of the “traditional” approach of asking an LLM and copy-pasting the code into your project, the code changes directly in the project itself.

This helped immensely once I started building more complex apps, and it let the workflow scale.

Then, around December, I got a new client who needed an application design.

I started the traditional way, by setting up components in Figma, and made some detailed designs to experiment with the brand. Designing screens this way was slow, and I didn’t have much budget or time.

Budget was tight because the client was a pre-seed startup, and time was tight because I was also starting my new business and agency.

I was driven by the idea of validating the ideas behind the screens. I didn’t just want to deliver design mockups to the client and be done with it. I wanted to actually know what people thought and move the product forward with user feedback.

In a traditional “design offer”, I would have described spending a few weeks designing screens, holding weekly meetings about them, and working towards a clickable Figma prototype.

We did this a lot at my previous agency, Mono. We also had an alternative offering where we built a front-end prototype instead of a Figma prototype, for a more lifelike validation without actually developing the app.

Like a true startup, the client had lots of feature ideas that kept coming in. Discussions flowed on Slack, and the team was on fire in quick calls. What I took away from those calls was that there were many features to design, but the core idea behind the whole app had not been validated yet.

I wanted to convince the client to start testing with users too. They were the ones who could tell him whether his ideas were valid, and for that I felt he needed something interactive.

So I combined everything I described above and started building an interactive front-end prototype with AI. Remembering my success with Claude and Cursor, I began working on it on January 7th of this year.

Four days later, we had a clickable version of the application UI with many interactive parts. By prompting Claude and splitting out the UI parts I needed, I ended up with around 15 separate Claude conversations, each holding a UI piece.

I would prompt for the UI parts and iterate on them independently in Claude first.

In Claude, you can also create projects, where you give top-level instructions that apply to all conversations within that project. One of my instructions was that all users of the app were Belgian, so whenever there was output like a data list or a grid of people, it should use Belgian names. These top-level instructions save you from repeating details in every individual conversation.

I would take screenshots of what I was building and bring them into Figma to get a bird’s-eye view of the project: take a screenshot of a UI piece in Claude, put it in Figma, zoom out to see how all the screens connect, and link them with arrows.

I would also sometimes use Figma to think more broadly about the app. In a prompt, you are forced to give direct instructions. In a design app, a different perspective opens up for me: I can think more broadly, place comments and work on more abstract ideas. This Figma overview also helped a lot in meetings with the larger project team to reach a shared understanding.

So, how does it all tie together? At a certain point, I had around 10 separate Claude conversations and the general wireframe in Figma, based on screenshots.

Next, I would download the code for all the UI parts from Claude and copy it into a fresh Next.js app. I created an overview page that clearly linked to every UI part. An overview page is common in wireframes, so that a client can validate all the possible UI paths.
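To illustrate the overview page idea, here is a small TypeScript sketch built around a hypothetical screen registry. The module names, titles and paths are invented, loosely based on the screens mentioned in this post, and `overviewOutline` only stands in for what a Next.js page would render with headings and `Link` components. Keeping every screen in one list means the overview page, and any shared navigation, can be generated from a single source.

```typescript
// Hypothetical registry: one entry per UI part copied over from a Claude conversation.
// Module names, titles and paths are illustrative, not taken from the actual project.
interface ScreenEntry {
  module: string;
  title: string;
  path: string;
}

const screens: ScreenEntry[] = [
  { module: "Public app", title: "Profile editor with live preview", path: "/public/profile" },
  { module: "Public app", title: "Calendar", path: "/public/calendar" },
  { module: "Backoffice", title: "Content list", path: "/backoffice/content" },
  { module: "Backoffice", title: "Block editor", path: "/backoffice/editor" },
];

// Group entries by module, preserving insertion order, so the overview
// can render one section per module.
function groupByModule(entries: ScreenEntry[]): Map<string, ScreenEntry[]> {
  const groups = new Map<string, ScreenEntry[]>();
  for (const entry of entries) {
    const group = groups.get(entry.module) ?? [];
    group.push(entry);
    groups.set(entry.module, group);
  }
  return groups;
}

// Build a plain-text outline of the overview page; a Next.js page would instead
// map the same groups to headings and <Link href={path}> elements.
function overviewOutline(entries: ScreenEntry[]): string[] {
  const lines: string[] = [];
  groupByModule(entries).forEach((group, moduleName) => {
    lines.push(moduleName);
    for (const screen of group) lines.push(`  ${screen.title} -> ${screen.path}`);
  });
  return lines;
}

console.log(overviewOutline(screens).join("\n"));
```

Adding a screen to the prototype then only means adding one entry to the registry.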

Now came the part where the different UI parts needed to be combined. I used Cursor, with its understanding of the whole codebase, to start connecting every part of the app through a sidebar component. To work faster, I used a dictation app called Wispr Flow to give detailed prompts inside Cursor.

For the same reason, I never bothered changing many of the defaults that came out of Cursor. Even though I have opinions about the tech stack it chose, that was ultimately irrelevant here, because:

- I am not actually working in the code; I am prompting and changing stuff

- This is a prototype meant for validation, not for production

In the end, we created a prototype with 7 modules, two main user perspectives (a user-facing public part and an expert backoffice part), and 20 connected UI screens. Most screens were moderately complex (a calendar, some CRUD modules for content, a block-based editor), and one was more complex: the part where you edit your profile with a live preview next to it.

I was so enthusiastic about this workflow. The client was super stoked with the result.

I asked him to test the prototype with his prospective users, and a few days later he came back with enthusiastic reports: the users he showed it to could really imagine what it would be like to use the app.

My goal of a user-testable app prototype was achieved, and we did it in a few days instead of the few weeks it used to take (excluding an initial design phase).

A presentation version of this blog post, with more details, was delivered earlier this month at a private company presentation.

This blog post shows the seeds of a new workflow that will definitely influence how we make prototypes at Obra. Running a software company and curious to ship faster? Get in touch.