I am investing in taking Screenshot to Layout to the next level. I am working with a few freelancers, each skilled in their own right, from making plugins for graphic design apps to advanced JavaScript wizardry. Together we will improve the plugin.

I want to write a bit of a “devlog” so I don’t forget what we are doing. Maybe it’s bad luck to talk about your plans, but OK. I am trying to build in public, and I found that I didn’t write down enough while doing Obra Icons.

Right now we are in a planning phase where I am setting milestones and attaching min/max budgets to these milestones.

- First, we will lightly research alternative solutions. Is Azure the right platform to keep building on? Should we use Tesseract? Or something else? I am pretty sure we will keep building on Azure, but with the knowledge of the team at hand, it feels like the right time to assess which alternatives exist.

- A very senior programmer friend I’ve known for a looooong time will likely take a close look and use his 30+ years of programming experience to assess the above.

- Next, we will do 2 parallel tracks: the first track is to improve the algorithm behind STL. What I call the “algo” actually consists of 2 parts.

- The first is what output is gained from “the machine”. Microsoft has a computer vision API, which, given an image with text, gives you back the coordinates of said text and information about what it detected as lines, words or paragraphs. The output of this is sometimes pretty good and sometimes has problems, so there is code that has to work around those problems.

- A reason to stay with Microsoft Azure is that Microsoft updates the engine every 6 months. A quick test of the latest model (31-10-23) is promising.

- The second part is the code that processes said text + coords information to the canvas. That code takes the raw information and tries to make sense of it. For example, if the bounding box of a line of text spans 20px between y1 and y2, and it’s only detected as a single line of text (no paragraphs), there is a likelihood that it should be rendered back to the canvas as 16px text. If it’s a paragraph, the calculation is a little bit different, because now we are also taking into account space between lines.

- What kind of improvements can we make then? I will be the first to say that the code that I wrote myself is not very good. It works, and I am quite proud of it (it’s even in TypeScript!), but my hope is that a second look by a skilled development team will bring the code to a new level, also enhancing the OCR output. Right now, there are various small problems which I discuss in detail in this video. To spare you the watch, they are a) inaccurate positioning, b) inconsistent sizing of text layers that look similar in the source screenshot, c) the recognition engine mistaking typical icon shapes for single characters (o, v, > etc.) and d) the system not working at all with vertically oriented text (text rotated 90 degrees).

- The other track is about making the plugin a fully inside-Figma experience. Right now, you have to register on an external website, and the process feels a bit disjointed. Ideally, you can trial the plugin without having to register at all, and then register if you use it a lot. I still want to keep the registration logic so I can track people’s usage and my cloud bill does not go overboard.

- When we have combined these two tracks, we need to get the word out about the plugin to more people. The third track is a small marketing track which involves improving the visual design of the website, promoting the idea to our combined network and possibly the creation of a clear demo/marketing video.
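To make the sizing step from the “algo” description a bit more concrete, here is a rough sketch of how a font size could be derived from bounding boxes. This is not the plugin’s actual code: the `Box` shape, the function names and the 0.8 ratio (read off the 20px box to 16px text example above) are assumptions for illustration, and the real Azure response looks different.

```typescript
// Hypothetical shape: top (y1) and bottom (y2) edge of a detected line, in px.
// The real OCR response and the plugin's own types are not shown in this post.
interface Box {
  y1: number;
  y2: number;
}

interface ParagraphEstimate {
  fontSize: number;
  lineHeight: number;
}

// Assumption: a line's bounding box is about 1.25x the font size,
// which matches the "20px box becomes 16px text" example.
const BOX_TO_FONT_RATIO = 0.8;

// Single line of text: scale the box height down to a font size.
function estimateSingleLineFontSize(box: Box): number {
  return Math.round((box.y2 - box.y1) * BOX_TO_FONT_RATIO);
}

// Paragraph: font size comes from the average line box height,
// line height from the average distance between consecutive line tops.
function estimateParagraph(lines: Box[]): ParagraphEstimate {
  if (lines.length === 0) {
    throw new Error("paragraph has no lines");
  }
  const avgHeight =
    lines.reduce((sum, line) => sum + (line.y2 - line.y1), 0) / lines.length;
  const fontSize = Math.round(avgHeight * BOX_TO_FONT_RATIO);
  if (lines.length === 1) {
    // No spacing information available, fall back to the box height.
    return { fontSize, lineHeight: Math.round(avgHeight) };
  }
  const sorted = [...lines].sort((a, b) => a.y1 - b.y1);
  const pitch =
    (sorted[sorted.length - 1].y1 - sorted[0].y1) / (sorted.length - 1);
  return { fontSize, lineHeight: Math.round(pitch) };
}
```

In practice a fixed ratio like this will be off for fonts with unusual ascenders or descenders, which is probably one source of the sizing problems listed above.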

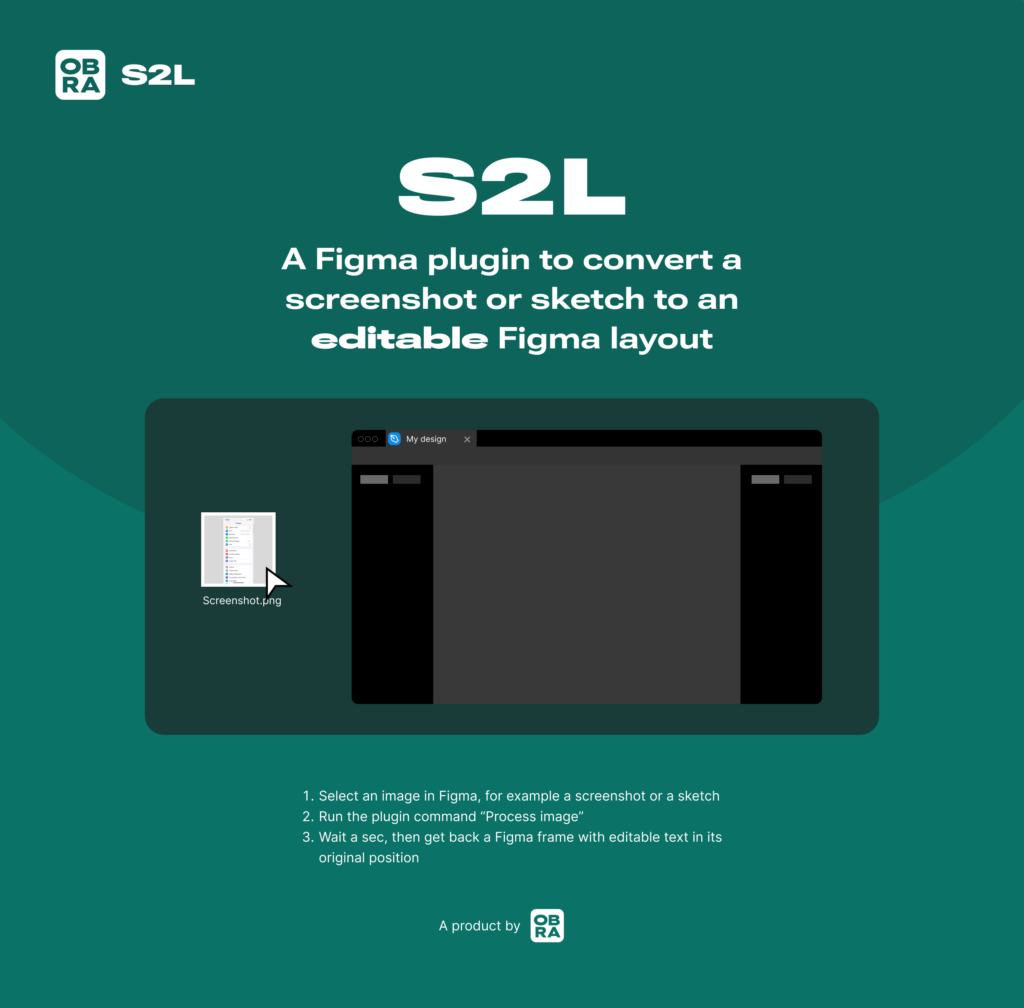

This is the plan for the first phase of the plugin development, which is slated for release in roughly 3 months. In the meantime, you can already try out the current version of the plugin for free. It will be free for at least the next full year, so make sure to give it a try. It’s here on Figma Community.