As a personal challenge, I tried to do a few things with the Figma API.

There were 3 main reasons to do so:

- I am interested in design automation. I feel like we are sometimes doing a lot of non-work in design. How can we automate repetitive tasks so we can focus on the actual work? (I love Jon Gold's work on this topic)

- I feel like my JavaScript skills have been getting worse instead of better. I can’t recall the last time I even wrote a simple script myself, so it’s time to step up my JavaScript game.

- I am currently giving Figma workshops (the first one was just this week) and I feel like I should know everything there is to know about Figma. I didn’t know that much about the API and now I feel like I know a thing or two.

(There will be public workshops later this year, possibly next year. If you are interested, get in touch!)

Since it was mostly for work I can’t really share too much of what I did, but the general idea is that it’s about design automation, where I am using the Figma API to bring things from the design side to the code side automatically.

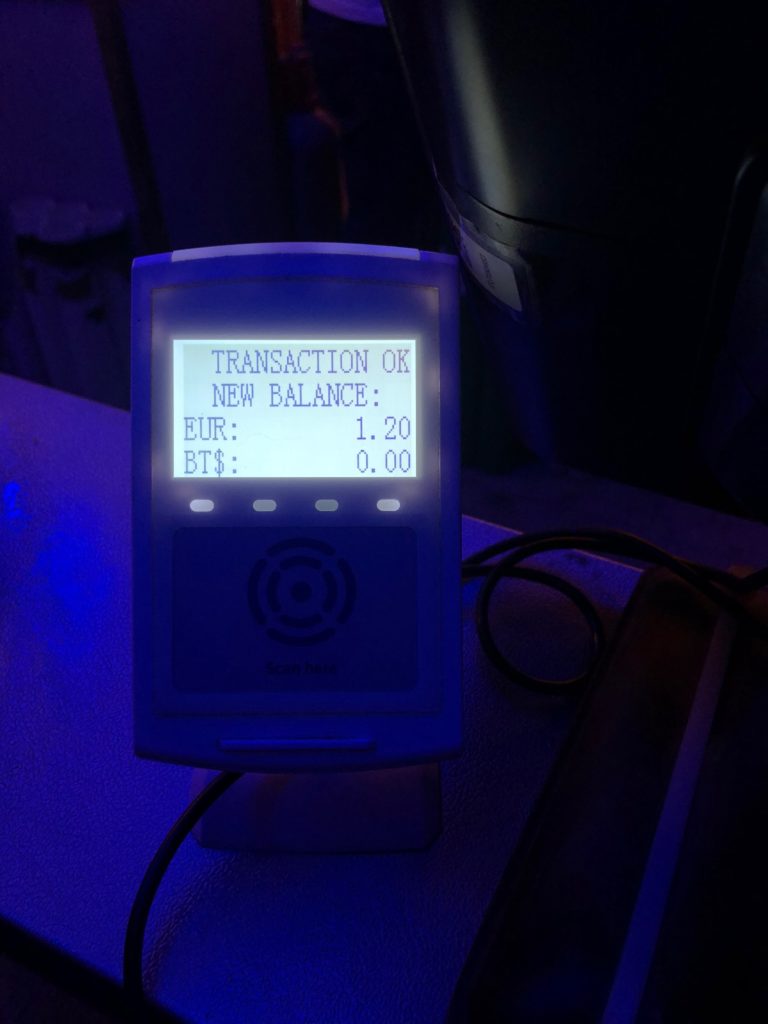

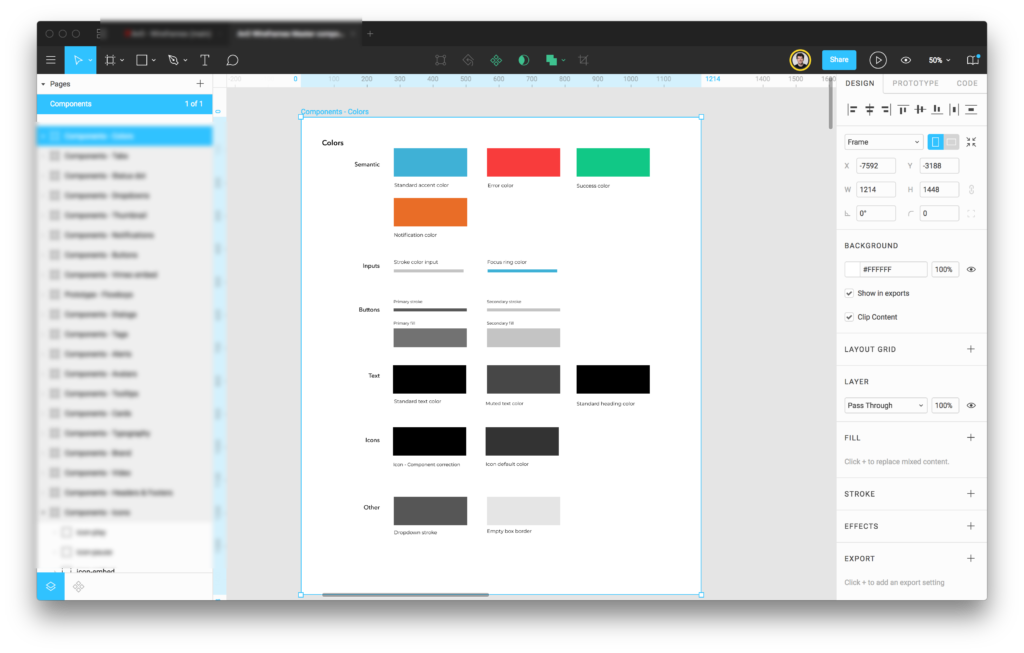

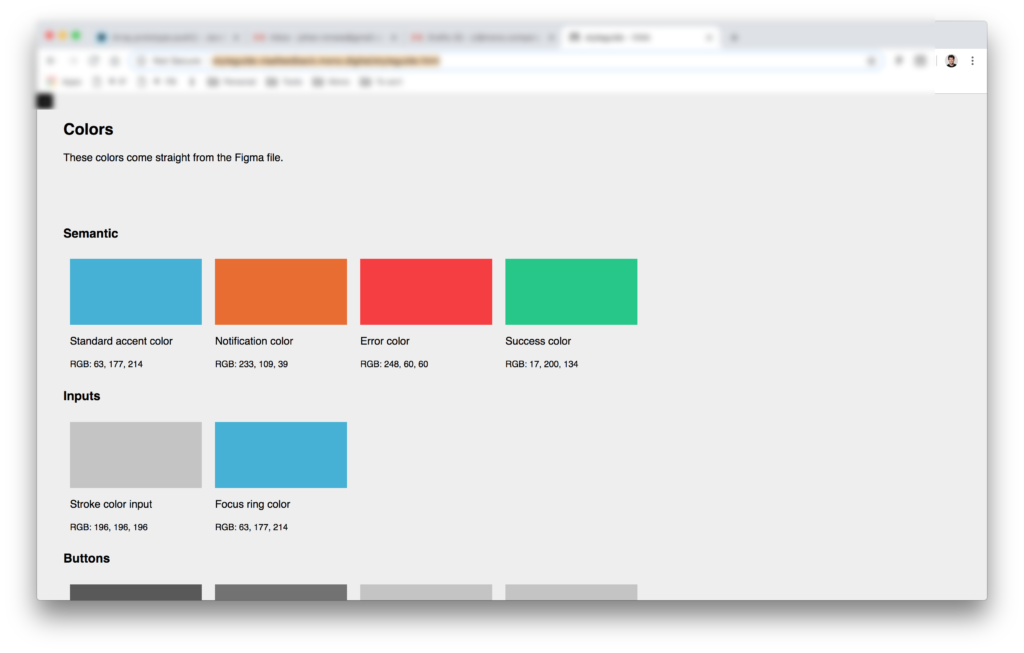

Here’s a small example, where I coded automatic listings of color palettes generated from a Figma file. In Figma the artboard would look like this:

And then on the website it would look like this:

These are colors from a wireframe by the way, so not really a final design.
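To give an idea of the kind of step involved, here is a minimal sketch of turning swatches into CSS colors. It is not the code from my project. It assumes each swatch is a node whose first fill is a solid color; the Figma API returns colors as `r`, `g` and `b` values between 0 and 1. The helper names and the sample data are made up for illustration.

```javascript
// Convert a Figma color ({r, g, b} as floats from 0 to 1) to a CSS hex string.
function figmaColorToHex(color) {
  function toHex(channel) {
    return Math.round(channel * 255).toString(16).padStart(2, "0");
  }
  return "#" + toHex(color.r) + toHex(color.g) + toHex(color.b);
}

// Hypothetical helper: return name and hex value for every swatch node
// whose first fill is a solid color. Other nodes are skipped.
function listSwatches(swatchNodes) {
  return swatchNodes
    .filter(function (node) {
      return node.fills && node.fills[0] && node.fills[0].type === "SOLID";
    })
    .map(function (node) {
      return { name: node.name, hex: figmaColorToHex(node.fills[0].color) };
    });
}

// Made-up sample data in the shape the API uses for fills.
const sampleSwatches = [
  { name: "Gray 900", fills: [{ type: "SOLID", color: { r: 0.2, g: 0.2, b: 0.2, a: 1 } }] },
  { name: "White", fills: [{ type: "SOLID", color: { r: 1, g: 1, b: 1, a: 1 } }] },
];

const palette = listSwatches(sampleSwatches);
```

Each entry in `palette` then has a `name` and a `hex` value that can be rendered as a swatch on a page.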

I am also working on a way to get SVG files in your designs without needing to manually export them (as described by GitHub). This is harder than I thought, and will need some more time.

The goal of this post is to summarize some of the resources I used to learn so you don’t have to go looking in every corner of the internet if you want to try something yourself. I would also like it if other people shared some more of their work because this seems like the nichest of topics.

Your first stop if you are interested should probably be the Figma API explorer. I didn’t see it at first, but if you look closely there are input fields on the page in which you can enter some text like your personal access token and a file key. You can then use this page to get example JSON back so you have an idea of the data you will be dealing with. It’s important to note that there are different API endpoints which give you different types of data.

With the images endpoint you can get images back; with the file one you can get your entire file structure; with the file node one a little part of your file structure.
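As a rough sketch, the three endpoints can be addressed like this. The function names are my own, and the file key, node ids and token are placeholders you need to fill in yourself. The `figmaGet` helper assumes an environment where `fetch` is available, such as a browser.

```javascript
// Base URL of the Figma REST API.
const FIGMA_API = "https://api.figma.com/v1";

// Files endpoint: the entire file structure.
function fileUrl(fileKey) {
  return FIGMA_API + "/files/" + fileKey;
}

// File nodes endpoint: only the parts of the file you ask for by node id.
function fileNodesUrl(fileKey, nodeIds) {
  return FIGMA_API + "/files/" + fileKey + "/nodes?ids=" +
    encodeURIComponent(nodeIds.join(","));
}

// Images endpoint: rendered images for the given nodes (for example "png" or "svg").
function imagesUrl(fileKey, nodeIds, format) {
  return FIGMA_API + "/images/" + fileKey + "?ids=" +
    encodeURIComponent(nodeIds.join(",")) + "&format=" + format;
}

// Request a URL with a personal access token and parse the JSON response.
function figmaGet(url, token) {
  return fetch(url, { headers: { "X-Figma-Token": token } }).then(function (response) {
    if (!response.ok) {
      throw new Error("Figma API responded with status " + response.status);
    }
    return response.json();
  });
}
```

A call would then look something like `figmaGet(fileNodesUrl("YOUR_FILE_KEY", ["1:2"]), "YOUR_TOKEN").then(console.log)`.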

This becomes important once you start coding because you will notice soon enough that getting an answer back from the API can take quite a while – and as the size of your file grows, the time it takes to pull it in grows as well. This means that in practice you will have to optimize your requests or cache query results if you want to make something that’s fast enough.
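One simple way to cache results is to remember the promise for each URL, so the same request is only sent once per page load. This is a minimal in-memory sketch, not what I ended up shipping. `withCache` is a made-up name, and the fake fetch function stands in for a real API call so the example runs without a network.

```javascript
// Wrap any function that takes a URL and returns a promise so that
// repeated requests for the same URL reuse the first result.
function withCache(fetchJson) {
  const cache = new Map();
  return function (url) {
    if (!cache.has(url)) {
      const promise = fetchJson(url).catch(function (error) {
        cache.delete(url); // do not keep failed requests around
        throw error;
      });
      cache.set(url, promise);
    }
    return cache.get(url);
  };
}

// Stand-in for a real API call that counts how often it is used.
let requestCount = 0;
function fakeFetchJson(url) {
  requestCount += 1;
  return Promise.resolve({ requestedUrl: url });
}

const cachedGet = withCache(fakeFetchJson);
const firstRequest = cachedGet("https://api.figma.com/v1/files/FILE_KEY");
const secondRequest = cachedGet("https://api.figma.com/v1/files/FILE_KEY");
// Both calls share one promise, so fakeFetchJson ran only once.
```

The cache disappears on reload. Anything longer-lived would need storage of some kind, and a way to notice that the Figma file has changed.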

The next thing you’re going to want to do is check out the Figma API demo repo, particularly the projector demo. This demo can basically teach you how to pull in images from Figma which should be useful to any design team needing feedback on their work.

In the index.html file of this project we can see some interesting functions which I could understand and adapt to my own project. I also used the progress bar code from this page.

I find this style of JavaScript a much simpler way to code than something like the figma-js npm package. But if you are into that kind of advanced JS, whatever floats your boat!

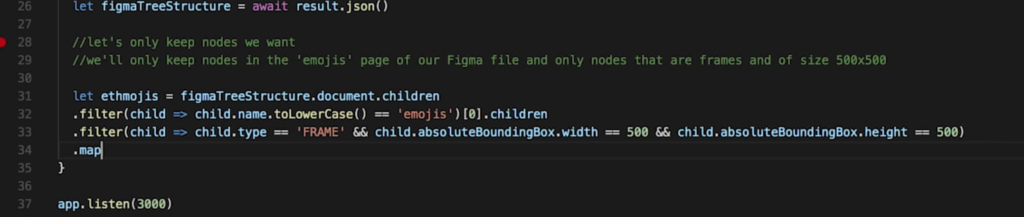

Another thing that really helped me is this YouTube video, where the author filters the JSON data she gets back from the Figma API.

This tutorial made me want to understand Array.prototype.filter() and get a better understanding of JavaScript objects. I went down the rabbit hole with this one and started learning a lot of things.
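For anyone who hasn't used it, here is roughly how `filter()` behaves on the kind of data Figma returns. The page list is made up, but pages do come back with the type `CANVAS` and a `children` array.

```javascript
// Made-up sample in the shape of a Figma document's children (its pages).
const samplePages = [
  { name: "Cover", type: "CANVAS", children: [] },
  { name: "Components - Colors", type: "CANVAS", children: [] },
  { name: "Components - Buttons", type: "CANVAS", children: [] },
];

// filter() keeps every item for which the callback returns true
// and always returns a new array, even when there is a single match.
const colorPages = samplePages.filter(function (page) {
  return page.name === "Components - Colors";
});

// The callback can be any test, for example a name prefix.
const componentPages = samplePages.filter(function (page) {
  return page.name.startsWith("Components");
});
```

Because the result is always an array, a single match still has to be picked out with something like `colorPages[0]`.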

The biggest learnings for me were Object.entries, flat(), and the aforementioned filter().

For example, I wrote this kind of code to grab simple properties from a document:

// Using file node endpoint

var documentName = Object.entries(apiResponse.nodes).flat()[1].document.name;

var documentWidth = Object.entries(apiResponse.nodes).flat()[1].document.absoluteBoundingBox.width;
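Step by step, this is what that chain does. The response below is a made-up, trimmed-down example of what the file nodes endpoint returns, where `nodes` is an object keyed by node id.

```javascript
// Made-up response in the shape returned by the file nodes endpoint.
const sampleResponse = {
  name: "Wireframes",
  nodes: {
    "1:2": {
      document: {
        id: "1:2",
        name: "Color palette",
        type: "FRAME",
        absoluteBoundingBox: { x: 0, y: 0, width: 1440, height: 900 },
      },
    },
  },
};

// Step 1: Object.entries turns the object into [key, value] pairs.
const entries = Object.entries(sampleResponse.nodes); // [["1:2", {...}]]

// Step 2: flat() removes one level of nesting.
const flattened = entries.flat(); // ["1:2", {...}]

// Step 3: index 1 is the value of the first (and here only) node.
const firstNode = flattened[1];
const nodeName = firstNode.document.name;
const nodeWidth = firstNode.document.absoluteBoundingBox.width;

// Object.values reaches the same node without the flat() step.
const sameNode = Object.values(sampleResponse.nodes)[0];
```

This only ever reaches the first node in the response, which is fine when you request a single node id. Note that the `nodes` wrapper is specific to the file nodes endpoint; the files endpoint returns a single `document` at the top level instead.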

And combined it with this kind of code to reduce a big file structure down to a smaller one, which I could then parse to my own structure and render back to the DOM using other code.

// Using files endpoint

// Grab all my pages

var pagesList = Object.entries(apiResponse.nodes).flat()[1].document.children;

// Filter my pages to find a specific page

var pagesListFiltered = pagesList.filter( function(item) { return item.name === "Components - Colors" });

// On this specific page, list my swatch group "groups"

var swatchGroups = Object.entries(pagesListFiltered).flat()[1].children.filter( function(item) { return item.name === "Swatch category" });

That’s what I have to share for now. I might write some more if there is interest.

Overall, I am quite happy with this. The Figma API is really cool and I think the skills I picked up now will be useful when we are focussing on designing with data (both in the new Sketch 52 and using Bedrock), React components in Framer X and more.